One of the more interesting nets to me are Generative Adversarial Networks (GANs) which are two (or more) connected networks that fight to win in a game to "outsmart" the other network. I've written about synthetic face generation before, and those applications use GANs. One network in the GAN learns to distinguish between real faces and synthetic faces and is called the discriminative network. The other network learns to generate synthetic faces and, not surprisingly, is called the generative network. The generative network is "rewarded" when a synthetic face is so realistic that it fools the discriminative network and "punished" when the discriminative network correctly identifies that the face is synthetic and not real. And when the generative network is rewarded, the discriminative network is punished and vice-versa. The two networks are locked in this zero sum win at all costs struggle, each trying to be rewarded and avoid punishment. If the GAN is set up correctly (being correct is mostly guesswork and trial and error), it can provide really impressive results as with the case of the synthetic faces.

But deception is an inherent part of the generative network. After all, it's designed to try an fool the discriminative network and ultimately us humans. Recently, a generative network went well past the bounds of deception expected by its creators. The application is this: transform aerial images into street maps and back to automate much of the image processing for things like google maps.

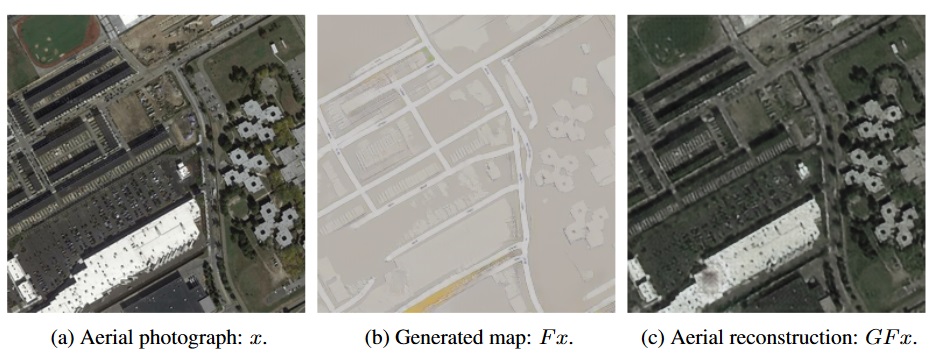

The above images show the process. There's the original aerial photograph (a), the street view (b), and the synthetic aerial view (c) that's reconstructed ONLY from the street view (b).

But wait! Looking at image (c), which is constructed from ONLY image (b), how on earth did it guess where to put the air conditioning units on the long white build? Or the trees? None of those details are in the street view image (b), right?

It turns out that the network "decided" to cheat:

It learned how to subtly encode the features of one into the noise patterns of the other. The details of the aerial map are secretly written into the actual visual data of the street map: thousands of tiny changes in color that the human eye wouldn’t notice, but that the computer can easily detect.In other words, the street view map has gazillions of minute variations that aren't visible to the human eye that encode the data required for the remarkable aerial reconstructions.

In fact, the computer is so good at slipping these details into the street maps that it had learned to encode any aerial map into any street map! It doesn’t even have to pay attention to the “real” street map — all the data needed for reconstructing the aerial photo can be superimposed harmlessly on a completely different street map...

This practice of encoding data into images isn’t new; it’s an established science called steganography, and it’s used all the time to, say, watermark images or add metadata like camera settings. But a computer creating its own steganographic method to evade having to actually learn to perform the task at hand is rather new.Note the last sentence. The generative network wasn't very good at generating the reconstructed aerial view the way it was supposed to. So instead, it figured out how to encode the data it needed so it didn't have to learn how to do it the right way.

The thing I find most interesting is the emergent deception. Nobody predicted this would happen (since it wasn't a desired result) and I don't think anybody could've predicted it.

We're currently able to use multiple networks with hundreds of millions of connections and we're already seeing emergent behavior that can't be predicted. Every ten years gives about a factor of 100 increase in processing power and network complexity.

It will be interesting to see what emerges when thousands of networks with billions of connections interact.

9 comments:

Bret,

This is all tremendously interesting, but I must confess I am not all that impressed with this particular example.

Looking at the figures, it looks a bit obvious that particular mask used to mark the roads in the map, from the original picture, is leaving patterns in the background that could allow partial reconstruction of the original one afterwards.

I get the computer did so without being directly instructed, but these adaptative neural networks you ahve been showing us lately always do stuff without being directly instructed anyway...

Well, to me this emergent side effect is qualitatively different than anything else I had seen before. I agree that it's not as flashy as some of the other stuff.

I guess I'd never before seen anything that led me to believe that things such as intention and consciousness could arise from interaction of complex networks. Now I'm left wondering. Just a little bit, but still...

... the street view (b)

Why is it that the street view is so epically wrong? Without even trying, and in just a few seconds, I saw so many mistakes in the street view as to make it nearly worthless.

The results you see are in the middle of a development effort, which, at this point is clearly not working. Yes, the street view isn't great and it isn't able to reconstruct aerial view at all without cheating.

Again, the point is pretty much only the emergent behavior.

The point being that the synthetic aerial view is so bad that it would make anything else look genius by comparison.

Heck, all that would need doing is essentially differential analysis; no neural network needed.

Hey Skipper,

I'm not sure what your point is. That something was tried that didn't work? That because of that we can be sure that everything that's tried in the future won't work? Or what?

(b), and the synthetic aerial view ...

Which is f-ing awful. So awful that using mere wetware I could identify at least a half dozen serious errors in a matter of a few seconds.

That is my point? The task for a neural network becomes easier the worse the input is. The worse it is, the easier to find the mistakes.

And this is really, really bad. As in so bad I didn't know that kind of badness was even possible. Simple differential analysis (if memory serves, XOR every pixel, with some allowance for less than exact constitutes a match) would have yielded a far better (b) than that.

"What is my point? ..."

Sorry.

After so many years, you think that blogger could figure out an edit function.

Maybe it needs a neural network.

Post a Comment